An expert consensus–based checklist for quality appraisal of educational resources on adult basic life support: a Delphi study

Abstract

Objective

Given the lack of a unified tool for appraising the quality of educational resources for lay-rescuer delivery of adult basic life support (BLS), this study aimed to develop an appropriate evaluation checklist based on a consensus of international experts.

Methods

In a two-round Delphi study, participating experts completed questionnaires in which they rated each item of a predeveloped 72-item checklist to indicate their agreement that the item should be used to evaluate the conformance of an adult BLS educational resource with resuscitation guidelines. Consensus on item inclusion was defined as a rating of ≥7 points from ≥75% of experts. Experts were encouraged to add anonymous suggestions for modifying or adding new items.

Results

Of the 46 participants, 42 (91.3%) completed the first round (representatives of 25 countries with a median of 16 years of professional experience in resuscitation) and 40 (87.0%) completed the second round. Thirteen of 72 baseline items were excluded, 55 were included unchanged, four were included after modification, and four new items were added. The final checklist comprises 63 items under the subsections “safety” (one item), “recognition” (nine items), “call for help” (four items), “chest compressions” (12 items), “rescue breathing” (12 items), “defibrillation” (nine items), “continuation of CPR” (two items), “choking” (10 items) and “miscellaneous” (four items).

Conclusion

The produced checklist is a ready-to-use expert consensus–based tool for appraising the quality of educational content on lay-rescuer provision of adult BLS. The checklist gives content developers a tool to ensure educational resources comply with current resuscitation knowledge, and may serve as a component of a prospective standardized international framework for quality assurance in resuscitation education.

INTRODUCTION

A lack of knowledge and skills in cardiopulmonary resuscitation (CPR) and related fears of causing harm are major barriers to attempting to resuscitate a person who has suffered a cardiac arrest [1,2]. Effective education in resuscitation improves levels of confidence and the willingness of bystanders to perform CPR in actual cardiac arrest situations [3]. Interventions aimed at increasing the penetration of CPR training in communities reportedly enhance the likelihood of bystanders performing CPR and improve neurological outcomes and survival rates after an out-of-hospital cardiac arrest [4,5]. Training of laypeople in resuscitation is strongly endorsed by the scientific community [6–8].

In spite of the importance of widespread dissemination of resuscitation training, the prevalence of CPR knowledge among the general public is low in many regions of the world [9]. For people who have no opportunity to attend traditional instructor-led CPR training, self-directed CPR learning is currently recommended as a reasonable alternative [6]. Various digital resuscitation-training resources, including videos, online courses, computer games, and smartphone apps, are available for public use. However, studies have shown that the educational content of such resources often does not adhere to resuscitation guidelines based on state-of-the-art resuscitation knowledge and evidence-based cardiac arrest management practices [10–12]. Suboptimal guideline compliance has also been revealed in certified instructor–led basic life support (BLS) courses [13,14].

Although it is apparent that a standardized framework for systematic quality control and quality assurance for educational resources on resuscitation is necessary [10,15], no unified tool for evaluating the quality of educational content on resuscitation is currently available. This study aimed to develop an expert consensus–based checklist for appraising the quality of educational resources on lay-rescuer delivery of adult BLS.

METHODS

The Delphi survey technique, a method of obtaining general consensus on a particular topic based on expert opinions collected through a series of structured questionnaires or “rounds,” was used, taking into account published practical guidance [16,17].

Expert recruitment

An informational letter explaining the study design and its aims was sent to experts, who were invited to participate through the European Resuscitation Council (ERC) Research NET, an international, interdisciplinary, and interprofessional group for the study of cardiac arrest and resuscitation [18]. Prospective participants were asked in an online questionnaire to provide data on their field of specialization, highest academic degree, number of years of professional experience in resuscitation, availability of provider or instructor certification(s) in CPR, familiarity with international resuscitation guidelines (self-rated on a 10-point Likert scale from 1 [not familiar at all] to 10 [have a thorough knowledge of]), prior participation in Delphi studies, and country of residence, and to affirm their willingness to participate by completing an electronic consent form in Google Forms (Google LLC). All participating experts were asked if they wished to be acknowledged in the final publication. No limitations to the number or geographic location of participants were applied. Participating experts were blinded to each other’s participation throughout the study.

Delphi procedure

A two-round Delphi exercise was carried out to create a consensus-based checklist. Each round was conducted over a 2-week period. Within each round, two email reminders were sent to nonresponders (on day 5 and day 11). If no response was received from an expert within the 2-week period, one additional attempt to obtain results was made immediately after the deadline in the form of a third email reminder.
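The reminder timetable described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name `reminder_schedule` is hypothetical, the round's start date is counted as day 1, "immediately after the deadline" is modeled as the following calendar day, and the example start date is invented rather than taken from the study.

```python
from datetime import date, timedelta


def reminder_schedule(round_start: date,
                      round_days: int = 14,
                      reminder_days: tuple = (5, 11)) -> dict:
    """Return key dates for one Delphi round (hypothetical helper).

    Assumption: the start date counts as day 1 of the round.
    """
    # Last day of the 2-week response window.
    deadline = round_start + timedelta(days=round_days - 1)
    # Reminders to nonresponders on day 5 and day 11.
    schedule = {
        f"reminder_day_{d}": round_start + timedelta(days=d - 1)
        for d in reminder_days
    }
    schedule["deadline"] = deadline
    # Assumption: the third reminder goes out the day after the deadline.
    schedule["final_reminder"] = deadline + timedelta(days=1)
    return schedule


if __name__ == "__main__":
    # Invented start date, used only to show the output shape.
    for label, when in reminder_schedule(date(2024, 1, 1)).items():
        print(label, when.isoformat())
```

Counting the start date as day 1 is a design choice; a study that counts it as day 0 would shift every date by one day.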

In the first round, experts were asked via email to review a baseline checklist (Supplementary Material 1) and complete an offline questionnaire (Microsoft Excel table, Microsoft Corp), rating each checklist item by answering the following question: “How much do you agree that this item should be utilized as part of the checklist for evaluating conformance of an adult BLS educational resource with resuscitation guidelines?” For the rating, a 9-point Likert scale was applied, ranging from 1 (totally disagree) to 9 (totally agree). Experts were encouraged to add anonymous free-text comments and suggestions for modifying, removing, or adding new checklist items.

The baseline checklist (Supplementary Material 1) [12] was based on the 2020 International Consensus on CPR [19], ERC Guidelines 2021 [20], and ERC COVID-19 Guidelines [21] as a rework of the original structured 36-item checklist by Jensen et al. [14]. The baseline checklist contained 72 items grouped in 11 thematic subsections, including “safety” (one item), “recognition” (10 items), “call for help” (four items), “chest compressions” (11 items), “rescue breathing” (12 items), “defibrillation” (eight items), “continuation of CPR” (two items), “recovery position” (three items), “choking” (10 items), “COVID-19” (six items), and “miscellaneous” (five items).

Results of the first round were analyzed by applying the following criteria. Items that received a rating of ≥7 points from ≥75% of experts were considered to have reached the consensus threshold for inclusion. Items on which expert consensus was achieved were subjected to a second round of evaluation without change, even if some suggestions for modification had been made. Items that received a rating of ≤3 points from ≥75% of experts were considered to have reached the consensus threshold for exclusion and were excluded at this step. Items that did not reach the consensus threshold for either inclusion or exclusion were modified according to experts’ comments (if a comment provided clear direction on how to modify an item) and carried forward to the second round. New items were added to the checklist when experts provided clear directions on how to formulate them. After the analysis, all experts received a personalized report with quantitative expert-group results, including each item’s median, lowest, and highest ratings, their own ratings, and a summary of all modifications and anonymous comments.

In the second round, all experts who completed the first round were contacted by email with a request to review the adjusted checklist and complete a questionnaire by rating each checklist item (including new items) on the same scale, considering their previous rating, expert-group rating, all comments, and modifications to the checklist in the first round.

The results were analyzed after the second round was closed. Items that received a rating of ≥7 points from ≥75% of experts were considered to have reached the consensus threshold for inclusion and were added to the final checklist. All other items were excluded. After the analysis, all experts received a report with the final results.
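The consensus criteria for both rounds can be expressed as a short Python sketch. It is not the authors' analysis procedure (the study reports using Microsoft Excel questionnaires and IBM SPSS); the function names, return labels, and example ratings are hypothetical and serve only to illustrate the thresholds stated above.

```python
from typing import Callable, Sequence

INCLUDE_MIN_RATING = 7   # "a rating of >=7 points"
EXCLUDE_MAX_RATING = 3   # "a rating of <=3 points"
CONSENSUS_SHARE = 0.75   # "from >=75% of experts"


def share(ratings: Sequence[int], predicate: Callable[[int], bool]) -> float:
    """Fraction of expert ratings (9-point scale) satisfying the predicate."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 1 or r > 9 for r in ratings):
        raise ValueError("ratings must lie between 1 and 9")
    return sum(1 for r in ratings if predicate(r)) / len(ratings)


def classify_round_one(ratings: Sequence[int]) -> str:
    """First round: three possible outcomes per item."""
    if share(ratings, lambda r: r >= INCLUDE_MIN_RATING) >= CONSENSUS_SHARE:
        return "carry forward unchanged"
    if share(ratings, lambda r: r <= EXCLUDE_MAX_RATING) >= CONSENSUS_SHARE:
        return "exclude"
    return "no consensus: modify if possible and carry forward"


def classify_round_two(ratings: Sequence[int]) -> str:
    """Second round: include on consensus, otherwise exclude."""
    if share(ratings, lambda r: r >= INCLUDE_MIN_RATING) >= CONSENSUS_SHARE:
        return "include"
    return "exclude"


if __name__ == "__main__":
    # Invented ratings from eight hypothetical experts.
    print(classify_round_one([9, 8, 7, 7, 9, 8, 5, 7]))
    print(classify_round_one([7, 7, 7, 6, 5, 8, 9, 4]))
    print(classify_round_two([7, 7, 7, 6, 5, 8, 9, 4]))
```

Note that an item with exactly 75% of ratings at or above 7 meets the threshold, because the criterion is "≥75%".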

Data that support findings of this study, including tables with a summary of anonymized expert ratings and comments, calculated quantitative expert-group results, modifications to the checklist, and blank questionnaire forms, are openly available in the Mendeley Data repository as a dataset [22]. As the study used nonsensitive and anonymized data, it did not require ethical approval and received an institutional review board exemption.

Statistical analysis

Data analysis was carried out with IBM SPSS ver. 26 (IBM Corp) and involved descriptive statistics (median, interquartile range [IQR], and absolute and relative values).

RESULTS

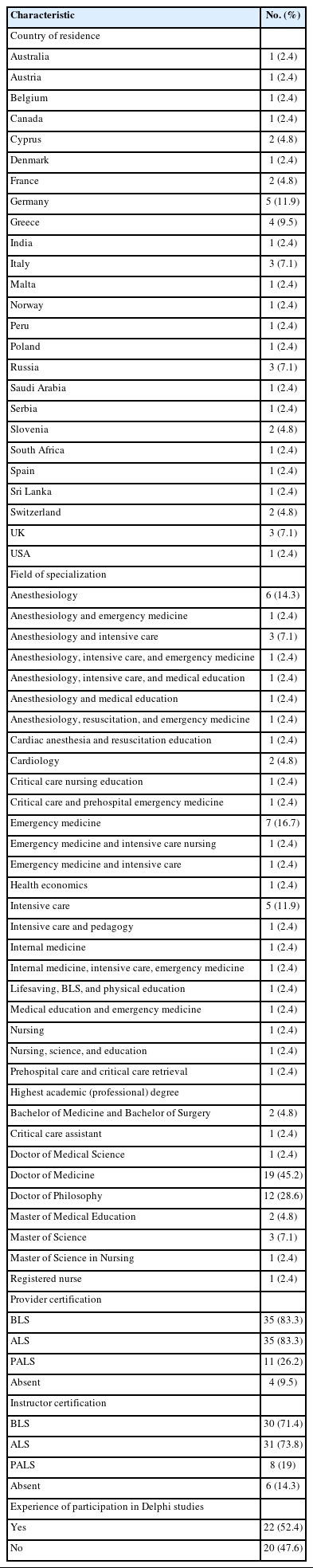

A total of 46 individuals initially agreed to participate in the study. Of these, 42 (91.3%) completed the first round (in November 2022) and 40 (87.0%) completed the second round (in December 2022). Characteristics of participants who completed the first round are provided in Table 1. The expert group represented 25 countries on six continents. Most participants (n=35, 83.3%) reported specializing in anesthesiology and/or intensive (critical) care and/or emergency medicine. Almost a third (31.0%) held a research doctoral degree (n=12) or a higher-level doctorate (n=1). The number of years of professional experience in resuscitation varied from 2 to 40 years (median, 16 years; IQR, 10–28 years). Most participants (n=35, 83.3%) were certified as BLS providers and 30 (71.4%) were BLS instructors. The median self-rating of familiarity with international resuscitation guidelines (American Heart Association Guidelines, ERC Guidelines, International Consensus on CPR) on a 10-point scale was 9 (IQR, 9–10). Previous participation in a Delphi study was reported by 22 participants (52.4%).

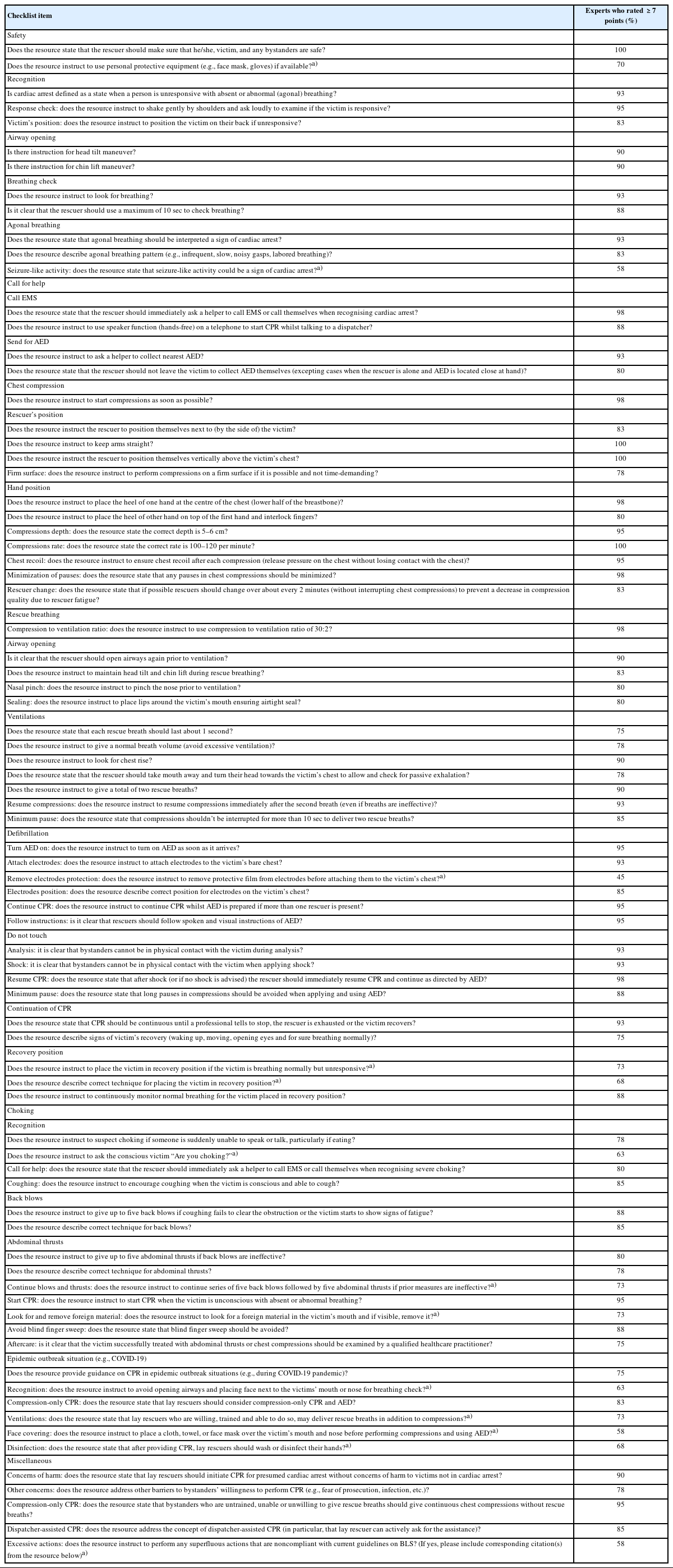

In the first round, the median percentage of experts who assigned an item a rating of ≤3 points was 5% (IQR, 2%–7%; range, 0%–38%) and the median percentage of experts who gave an item ≥7 points was 83% (IQR, 76%–90%; range, 40%–100%). No items reached the consensus threshold for exclusion in the first round. Of the 72 baseline items, 57 reached the consensus threshold for inclusion and were carried forward to the second round unchanged, and 15 did not reach the consensus threshold for either inclusion or exclusion and were also carried forward to the second round. Of those 15 items, nine were modified according to experts’ comments (see dataset [22]). Following experts’ suggestions, seven new items were added to the checklist.

The second round began with 79 items. In that round, the median percentage of experts who assigned an item a rating of ≤3 points was 5% (IQR, 0%–10%; range, 0%–28%) and the median percentage of experts who gave an item ≥7 points was 85% (IQR, 78%–93%; range, 45%–100%). The participating experts agreed to accept the majority of the items covering essential components of adult BLS, including safety considerations, recognition of cardiac arrest, call for help, chest compressions and rescue breathing techniques, use of an automated external defibrillator, help in choking, and miscellaneous questions. In the second round, 66 items reached the consensus threshold for inclusion (Table 2; see dataset [22]) and the other 13 items did not and were excluded. Of these 13 items, two belonged to the “recovery position” subsection and four to the “epidemic outbreak situations” (originally, “COVID-19”) subsection; in each case, they represented essential elements and the majority of the items of the subsection. A decision was therefore made to exclude both subsections entirely, including three items that had reached consensus for inclusion. Consequently, 63 items were retained for the final checklist (Supplementary Material 2), including 55 unchanged baseline items, four modified items, and four new items, under the subsections “safety” (one item), “recognition” (nine items), “call for help” (four items), “chest compressions” (12 items), “rescue breathing” (12 items), “defibrillation” (nine items), “continuation of CPR” (two items), “choking” (10 items), and “miscellaneous” (four items).
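The item flow across the two rounds can be checked with simple arithmetic. The Python sketch below uses only counts reported in this article; the variable names are hypothetical, and the script is a bookkeeping aid rather than part of the study's analysis.

```python
# Baseline checklist: items per subsection, as reported in the Methods.
baseline = {
    "safety": 1, "recognition": 10, "call for help": 4,
    "chest compressions": 11, "rescue breathing": 12, "defibrillation": 8,
    "continuation of CPR": 2, "recovery position": 3, "choking": 10,
    "COVID-19": 6, "miscellaneous": 5,
}

# Final checklist: items per subsection, as reported in the Results.
final = {
    "safety": 1, "recognition": 9, "call for help": 4,
    "chest compressions": 12, "rescue breathing": 12, "defibrillation": 9,
    "continuation of CPR": 2, "choking": 10, "miscellaneous": 4,
}

baseline_total = sum(baseline.values())          # 72 baseline items
round_one_consensus, round_one_open = 57, 15     # first-round outcomes
new_items = 7                                    # added after round one
round_two_total = baseline_total + new_items     # items entering round two

round_two_included, round_two_excluded = 66, 13  # second-round outcomes
dropped_with_subsections = 3                     # consensus items removed
final_total = round_two_included - dropped_with_subsections

# Each check mirrors one statement in the text.
assert round_one_consensus + round_one_open == baseline_total
assert round_two_included + round_two_excluded == round_two_total
assert final_total == sum(final.values()) == 55 + 4 + 4

print(baseline_total, round_two_total, final_total)
```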

DISCUSSION

Educational efficiency and implementation of resuscitation science are key determinants of survival after cardiac arrest [23]. Turning scientific evidence into practice, in turn, depends on the effectiveness of translating knowledge drawn from resuscitation guidelines through education [8,24].

Although considerable efforts are being made by resuscitation researchers to improve educational efficiency by implementing optimal instructional designs and strategies for teaching resuscitation [6,8], relatively little attention has been paid to quality control of the educational content [15]. Studies have shown that training programs and educational resources on resuscitation commonly do not comply with relevant guidelines, omit core evidence-based recommendations, or incorrectly present essential learning elements [10–14]. This suggests a need to address the issue systematically by establishing a standardized international framework for quality control and quality assurance in resuscitation education [10].

The current study is a step toward realizing this goal. It represents the first attempt to generate a checklist for appraising the quality of educational resources on BLS using an international expert opinion consensus achieved through the Delphi technique. The use of a validated consensus-generating method, involvement of a large number of experts with extensive experience in resuscitation, and high participation rate support the robustness of the study results.

A blank template for “The ERC Research NET structured checklist for quality appraisal of educational resources on adult basic life support” is available online [22]. We propose using the checklist to ensure full coverage of essential issues of lay-rescuer delivery of adult BLS in a syllabus of resuscitation-training resources and to guarantee adherence of educational content to state-of-the-art understanding of effective techniques for resuscitation. The checklist could be utilized by resource developers designing new educational programs and materials, or to bring existing resources into agreement with current evidence-based resuscitation knowledge. The checklist is a ready-to-use tool for conducting research involving expert-led systematic evaluation of the quality of face-to-face courses or electronic training resources on BLS (including online courses, videos, and mobile apps) in terms of compliance with resuscitation guidelines. In particular, such research could help create a collection of reliable, free-of-charge, web-based, or downloadable multimedia resources that could be recommended for mass distribution and therefore contribute to improving the worldwide availability and dissemination of high-quality public education on resuscitation.

Future steps include testing of the checklist for interrater and intrarater reliability, translation of the checklist into different languages, and updating the checklist as new resuscitation research evidence becomes available. The expert consensus procedure employed in this study could be used to produce similar instruments for appraising educational programs and resources on pediatric BLS, adult and pediatric ALS, and various aspects of first aid.

This study has limitations to be acknowledged. Given that the checklist was based on the International Consensus on CPR [19] and the ERC Guidelines [20,21], its content may not correspond fully with national guidelines that have country-specific peculiarities. Therefore, before using the checklist to evaluate conformance of adult BLS educational resources with national guidelines, the checklist may need to be adjusted accordingly.

In summary, this study utilized a validated expert consensus technique to create a 63-item structured checklist for appraising the quality of educational content on lay-rescuer delivery of adult BLS. Widespread use of the checklist by developers of educational programs and resources on BLS should improve compliance with current evidence-based knowledge on resuscitation and contribute to enhanced educational efficiency. The checklist could be incorporated into a standardized international framework for quality control and quality assurance in resuscitation education.

SUPPLEMENTARY MATERIAL

Supplementary materials are available from https://doi.org/10.15441/ceem.23.049.

Supplementary Material 1. Baseline checklist for evaluating educational resources on lay rescuer adult BLS in terms of compliance with international resuscitation guidelines.

Supplementary Material 2. Final expert consensus-based checklist for evaluating educational resources on lay rescuer adult BLS in terms of compliance with international resuscitation guidelines.

Notes

ETHICS STATEMENT

Not applicable.

CONFLICT OF INTEREST

Bernd W. Böttiger is board member and treasurer of the European Resuscitation Council (ERC), chairman of the German Resuscitation Council (GRC), federal state doctor of the German Red Cross, member of the Advanced Life Support (ALS) Task Force of the International Liaison Committee on Resuscitation (ILCOR), board member of the German Interdisciplinary Association for Intensive Care and Emergency Medicine (DIVI), founder of the German Resuscitation Foundation, founder of the ERC Research NET, co-editor of Resuscitation, editor of Notfall+Rettungsmedizin, and co-editor of the Brazilian Journal of Anesthesiology. He received fees for lectures from the following companies: Forum für medizinische Fortbildung (FomF), Baxalta Deutschland GmbH, ZOLL Medical Deutschland GmbH, C.R. Bard GmbH, GS Elektromedizinische Geräte G. Stemple GmbH, Novartis Pharma GmbH, Philips GmbH Market DACH, and Bioscience Valuation BSV GmbH. No other potential conflict of interest relevant to this article was reported.

FUNDING

None.

AUTHOR CONTRIBUTIONS

Conceptualization: AB; Data curation: all authors; Formal analysis: AB, AG; Investigation: all authors; Methodology: AB, AG, BWB; Project administration: AB, BWB; Supervision: AB; Validation: AB, AG; Writing–original draft: AB; Writing–review & editing: AG, BWB, the Delphi Study Investigators. All authors read and approved the final manuscript.

Acknowledgements

The full list of the Delphi Study Investigators (in alphabetical order): Abdulmajeed Solaiman Khan (Saudi Resuscitation Council, Pan Arab Resuscitation Council, Mecca, Saudi Arabia); Ahmed Elshaer (Department of Accident and Emergency, University Hospital Ayr, Ayr, Scotland, UK); Amber V. Hoover (American Heart Association, Dallas, TX, USA); Anastasia Spartinou (School of Medicine, University of Crete, Giofirakia, Greece; Emergency Department, University Hospital of Heraklion, Heraklion, Greece); Andrea Scapigliati (Università Cattolica S. Cuore, Institute of Anesthesiology and Intensive Care, Fondazione Policlinico Universitario A. Gemelli, IRCCS, Rome, Italy); Artem Kuzovlev (Federal Research and Clinical Center of Intensive Care Medicine and Rehabilitology, Moscow, Russia); Baljit Singh (Department of Anesthesiology, Faculty of Medicine and Health Sciences, SGT University, Gurugram, India); Clare Morden (Department of Intensive Care Medicine, Salisbury District Hospital, Salisbury, UK); Cristian Abelairas-Gómez (CLINURSID Research Group; Faculty of Education Sciences, Universidade de Santiago de Compostela, Santiago de Compostela, Spain); Daniel Meyran (Bataillon de Marins Pompiers, Groupement santé, Marseille, France); Daniel Schroeder (Central Hospital of the German Armed Forces, Koblenz, Germany); Daniil O. Starostin (Federal Research and Clinical Center of Intensive Care Medicine and Rehabilitology, Moscow, Russia); David Stanton (Netcare, Johannesburg, South Africa); Eirik Alnes Buanes (Haukeland University Hospital, Bergen, Norway); Ekaterina A. Boeva (Federal Research and Clinical Center of Intensive Care Medicine and Rehabilitology, Moscow, Russia); Enrico Baldi (Division of Cardiology, Fondazione IRCCS Policlinico San Matteo, Pavia, Italy); Evanthia Georgiou (Education Sector, Nursing Services, Ministry of Health, Nicosia, Cyprus); Jacqueline Eleonora Ek (Emergency Department, Mater Dei Hospital, Malta); Jan Breckwoldt (University Hospital Zurich, Institute of Anesthesiology, Zurich, Switzerland); Jan Wnent (University Hospital Schleswig-Holstein, Institute for Emergency Medicine, Kiel, Germany); Jessica Grace Rogers (Physician Response Unit, London’s Air Ambulance, Barts Health NHS Trust, London, UK); Kasper G. Lauridsen (Department of Medicine, Randers Regional Hospital, Randers, Denmark); Lukasz Szarpak (Institute of Outcomes Research, Maria Sklodowska-Curie Medical Academy, Warsaw, Poland); Marios Georgiou (American Medical Center, Nicosia, Cyprus); Nadine Rott (Department of Anesthesiology and Intensive Care Medicine, University Hospital of Cologne, Cologne, Germany; German Resuscitation Council, Ulm, Germany); Nilmini Wijesuriya (Colombo North Teaching Hospital, Ragama, Sri Lanka); Nino Fijačko (University of Maribor, Faculty of Health Sciences, Maribor, Slovenia); Olympia Nikolaidou (EMS-National Center for Emergency Care (EKAB), Thessaloniki, Greece); Pascal Cassan (International Federation Of Red Cross and Red Crescent National Societies, Paris, France); Peter Paal (Department of Anesthesiology and Intensive Care Medicine, St. John of God Hospital, Paracelsus Medical University, Salzburg, Austria); Peter T. Morley (University of Melbourne, Royal Melbourne Hospital, Melbourne, Australia); Raffo Escalante-Kanashiro (Instituto Nacional de Salud del Niño, Lima, Peru); Robert Greif (University of Bern, Bern, Switzerland; School of Medicine, Sigmund Freud University Vienna, Vienna, Austria); Sabine Nabecker (Department of Anesthesiology and Pain Medicine, Sinai Health System, University of Toronto, Toronto, Canada); Simone Savastano (Division of Cardiology, Fondazione IRCCS Policlinico San Matteo, Pavia, Italy); Theodoros Aslanidis (Intensive Care Unit, St. Paul (“Agios Pavlos”) General Hospital, Thessaloniki, Greece); Violetta Raffay (Department of Medicine, School of Medicine, European University Cyprus, Nicosia, Cyprus); Vlasios Karageorgos (School of Medicine, University of Crete, Giofirakia, Greece; Department of Anesthesiology, University Hospital of Heraklion, Heraklion, Greece); Wolfgang A. Wetsch (Department of Anesthesiology and Intensive Care Medicine, University Hospital of Cologne, Faculty of Medicine, University of Cologne, Cologne, Germany); Željko Malić (First Aid Department, Slovenian Red Cross, Ljubljana, Slovenia).

References

Capsule Summary

What is already known

The educational content of resuscitation-training resources commonly does not adhere to relevant guidelines, which define the state-of-the-art in resuscitation knowledge and recommend evidence-based practices for the management of cardiac arrest. While it is apparent that a standardized framework for systematic quality control and quality assurance for educational resources on resuscitation is necessary, no unified tool for evaluating the quality of educational content on resuscitation currently exists.

What is new in the current study

This study represents the first attempt to generate a checklist for appraising the quality of educational resources on basic life support based on an international expert opinion consensus achieved through the Delphi technique. The checklist could serve as a constituent element of a prospective standardized international framework for quality control and quality assurance in resuscitation education.